Online Learning Hyper-Heuristics in Multi-Objective Evolutionary Algorithms

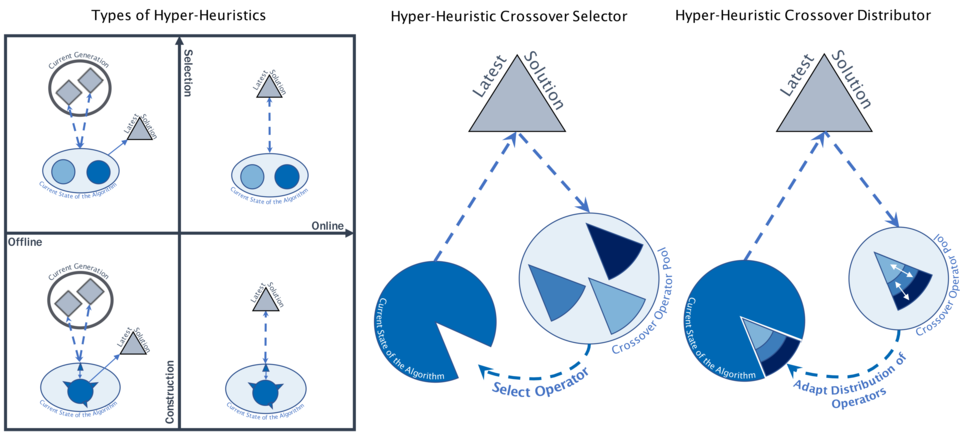

In a world of rising complexity, optimization problems are becoming more diverse, dynamic and sophisticated. To solve them, more and more algorithms and algorithm components are being developed. This raises a new challenge: selecting the best algorithm. One approach to this challenge is the so-called “Hyper-Heuristics”: top-level optimization algorithms that select the best bottom-level optimization algorithm for a given problem from a pool of candidates. Hyper-Heuristics can be categorized along two axes, one ranging from “Construction” to “Selection” and the other from “Offline” to “Online”. We apply a Hyper-Heuristic to NSGA-II to select its crossover operator, i.e., one component of the algorithm rather than a complete algorithm. Our approach therefore lies between “Selection” and “Construction”. We employ an online reward system that scores the different operators during the optimization run, which places the approach at the “Online” end of the second axis.
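The reward scheme itself is not spelled out in this summary. The following is therefore only a minimal Python sketch of what an online operator-scoring step could look like, under loudly stated assumptions. An operator's reward is assumed to be the share of its offspring that survive NSGA-II's environmental selection, and scores are assumed to be smoothed with an exponential moving average. The function `update_scores`, the learning rate `alpha` and the operator names are hypothetical.

```python
# Hypothetical sketch of an online reward/score update (NOT the paper's exact scheme).
# Assumption: reward = fraction of an operator's offspring that survive
# NSGA-II environmental selection; score = exponential moving average of rewards.

def update_scores(scores, produced, survived, alpha=0.3):
    """Return the operator scores after one generation.

    scores:   dict operator name -> current score
    produced: dict operator name -> offspring created by that operator this generation
    survived: dict operator name -> how many of those offspring survived selection
    alpha:    hypothetical learning rate in (0, 1]; larger values react faster
    """
    new_scores = {}
    for op, old in scores.items():
        n = produced.get(op, 0)
        if n == 0:
            # Operator was not used this generation, so there is no new evidence.
            new_scores[op] = old
            continue
        reward = survived.get(op, 0) / n
        # Blend the old score with the new reward.
        new_scores[op] = (1 - alpha) * old + alpha * reward
    return new_scores


# Illustrative operator names only (e.g. SBX = simulated binary crossover).
scores = {"SBX": 0.5, "DE": 0.5, "UX": 0.5}
scores = update_scores(
    scores,
    produced={"SBX": 40, "DE": 40, "UX": 20},
    survived={"SBX": 30, "DE": 10, "UX": 10},
)
print(scores)
```

In this example, the operator whose offspring survive most often (SBX) gains score, the least successful one (DE) loses score, and an unused operator would keep its old score. The moving average keeps a single lucky or unlucky generation from dominating the scores.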

These scores are used by two different selection mechanisms:

- Hyper-Heuristic Crossover Selector: applies a single operator per generation, chosen with a probability that depends on the operators' scores

- Hyper-Heuristic Crossover Distributor: applies all operators in each generation, partitioning the offspring among them according to their scores
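The two mechanisms can be sketched in Python as follows. This is an illustrative sketch, not the paper's implementation. It assumes non-negative scores and uses roulette-wheel (score-proportional) sampling for the Selector. For the Distributor, it assumes that the offspring are split in proportion to the scores, using largest-remainder rounding. The function names `select_operator` and `distribute_offspring` are hypothetical.

```python
import random


def select_operator(scores, rng=random):
    """Crossover Selector (sketch): pick ONE operator for the whole generation,
    with probability proportional to its (non-negative) score."""
    ops = list(scores)
    weights = [max(scores[op], 0.0) for op in ops]
    if sum(weights) == 0:
        # No information yet: fall back to a uniform choice.
        return rng.choice(ops)
    return rng.choices(ops, weights=weights, k=1)[0]


def distribute_offspring(scores, n_offspring):
    """Crossover Distributor (sketch): use ALL operators, splitting the
    offspring budget in proportion to the scores. Largest-remainder rounding
    makes the integer shares sum exactly to n_offspring."""
    ops = list(scores)
    total = sum(max(scores[op], 0.0) for op in ops)
    if total == 0:
        raw = {op: n_offspring / len(ops) for op in ops}
    else:
        raw = {op: n_offspring * max(scores[op], 0.0) / total for op in ops}
    shares = {op: int(raw[op]) for op in ops}  # floor of each ideal share
    leftover = n_offspring - sum(shares.values())
    # Give the remaining slots to the operators with the largest fractional parts.
    for op in sorted(ops, key=lambda o: raw[o] - shares[o], reverse=True)[:leftover]:
        shares[op] += 1
    return shares


scores = {"SBX": 0.575, "DE": 0.425, "UX": 0.5}  # illustrative values
print(select_operator(scores, random.Random(42)))
print(distribute_offspring(scores, 100))
```

In a generation, the Selector's result would serve as the crossover operator for all mating pairs. Under the Distributor, each operator would instead produce its assigned number of offspring. Operators with low scores still receive a share, which keeps some exploration alive.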

Based on our experiments with these selection mechanisms, we developed a new version that combines both approaches. It achieved promising results in terms of the generalizability of the crossover operator selection.

More details can be found in our full paper:

- Julia Heise and Sanaz Mostaghim

- Online Learning Hyper-Heuristics in Multi-Objective Evolutionary Algorithms

- EMO 2023: Evolutionary Multi-Criterion Optimization, pp. 162–175, March 2023, https://doi.org/10.1007/978-3-031-27250-9_12